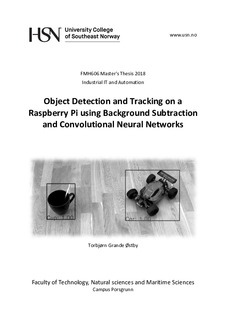

Object Detection and Tracking on a Raspberry Pi using Background Subtraction and Convolutional Neural Networks

Master thesis

Permanent link

http://hdl.handle.net/11250/2567353

Publication date

2018


Abstract

Object detection and tracking are key features in many computer vision applications. Most state-of-the-art models for object detection, however, are computationally complex. The goal of this project was to develop a fast and lightweight framework for object detection and object tracking in a sequence of images using a Raspberry Pi 3 Model B, a low-cost and low-power computer. As even the most lightweight state-of-the-art object detection models, i.e. Tiny-YOLO and SSD300 with MobileNet, were considered too computationally complex, a simplified approach had to be taken. This approach assumed a stationary camera and access to a background image. With these constraints, background subtraction was used to locate objects, while a lightweight object recognition model based on MobileNet was used to classify any objects that were found. A tracker that primarily relied on object location and size was used to track distinct objects between frames.
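The locate-then-track part of this pipeline can be illustrated with a minimal sketch. It is not the thesis implementation: plain Python lists stand in for greyscale images so that the example has no dependencies, and the names `foreground_mask`, `find_boxes`, `match_cost` and `update_tracks`, as well as the `threshold` and `max_cost` values, are assumptions made for illustration. The classification step is omitted; in the thesis, a MobileNet-based model would be run on each located region.

```python
from dataclasses import dataclass
from math import hypot


@dataclass
class Box:
    x: int  # left column
    y: int  # top row
    w: int  # width in pixels
    h: int  # height in pixels

    @property
    def centre(self):
        return (self.x + self.w / 2, self.y + self.h / 2)


def foreground_mask(frame, background, threshold=30):
    """Mark pixels whose grey level differs from the background image."""
    return [[abs(p - b) > threshold for p, b in zip(frow, brow)]
            for frow, brow in zip(frame, background)]


def find_boxes(mask):
    """Return one bounding box per 4-connected foreground region."""
    rows, cols = len(mask), len(mask[0])
    seen = [[False] * cols for _ in range(rows)]
    boxes = []
    for r in range(rows):
        for c in range(cols):
            if not mask[r][c] or seen[r][c]:
                continue
            # Flood fill from this pixel, growing the box as we go.
            stack = [(r, c)]
            seen[r][c] = True
            r0 = r1 = r
            c0 = c1 = c
            while stack:
                y, x = stack.pop()
                r0, r1 = min(r0, y), max(r1, y)
                c0, c1 = min(c0, x), max(c1, x)
                for ny, nx in ((y + 1, x), (y - 1, x), (y, x + 1), (y, x - 1)):
                    if (0 <= ny < rows and 0 <= nx < cols
                            and mask[ny][nx] and not seen[ny][nx]):
                        seen[ny][nx] = True
                        stack.append((ny, nx))
            boxes.append(Box(c0, r0, c1 - c0 + 1, r1 - r0 + 1))
    return boxes


def match_cost(a, b):
    """Smaller is better: centre distance plus difference in size."""
    (ax, ay), (bx, by) = a.centre, b.centre
    return hypot(ax - bx, ay - by) + abs(a.w - b.w) + abs(a.h - b.h)


def update_tracks(tracks, detections, next_id, max_cost=20.0):
    """Greedily give each detection the closest unused track ID, else a new ID."""
    updated = {}
    unused = dict(tracks)
    for det in detections:
        best = min(unused, key=lambda tid: match_cost(unused[tid], det),
                   default=None)
        if best is not None and match_cost(unused[best], det) <= max_cost:
            updated[best] = det
            del unused[best]
        else:
            updated[next_id] = det
            next_id += 1
    return updated, next_id


# Usage: a 2x2 object that moves one pixel to the right between two frames.
background = [[0] * 8 for _ in range(6)]
frame1 = [row[:] for row in background]
frame2 = [row[:] for row in background]
for y in (1, 2):
    for x in (1, 2):
        frame1[y][x] = 255
        frame2[y][x + 1] = 255

tracks, next_id = update_tracks(
    {}, find_boxes(foreground_mask(frame1, background)), 0)
tracks, next_id = update_tracks(
    tracks, find_boxes(foreground_mask(frame2, background)), next_id)
print(tracks)
```

Because matching relies only on position and size, two objects that merge into a single foreground region produce one box and are treated as one object. This is in line with the weaknesses the abstract reports for objects in close proximity.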

The suggested framework achieved frame rates as high as 7.9 FPS with 1 object in the scene, and 2.9 FPS when 6 objects were present. These values are significantly higher than those achieved using the mentioned state-of-the-art models: more than 7 times higher for 1 object and 2.6 times higher for 6 objects. This performance, however, comes at a price.
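The abstract does not state the frame rates of the reference models, but they can be roughly inferred from the quoted figures. The sketch below treats "more than 7 times" as exactly 7, so the first result is an upper bound on the baseline; the variable names are illustrative only.

```python
# Figures quoted in the abstract: number of objects in the scene -> value.
framework_fps = {1: 7.9, 6: 2.9}
speedup = {1: 7.0, 6: 2.6}  # assumption: "more than 7 times" taken as 7

# Implied frame rate of the state-of-the-art models.
baseline_fps = {n: framework_fps[n] / speedup[n] for n in framework_fps}

for n, fps in baseline_fps.items():
    print(f"{n} object(s): implied baseline of about {fps:.2f} FPS")
```

Under this reading, the implied baseline is roughly 1.1 FPS in both cases, which suggests that the frame rate of the reference models changes little with the number of objects, whereas that of the suggested framework drops from 7.9 to 2.9 FPS.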

While the suggested framework was seen to work well in many situations, it does have several weaknesses. These include poor handling of occlusion, an inability to distinguish between objects in close proximity, and false detections when lighting conditions change. Additionally, its processing speed is affected by the number of objects in an image to a larger degree than that of the state-of-the-art models. None of the mentioned models have deterministic processing speeds.